Grassmann extrapolation of density matrices for Born-Oppenheimer molecular dynamics

Abstract

Born–Oppenheimer Molecular Dynamics (bomd) is a powerful but expensive technique. The main bottleneck in a density functional theory bomd calculation is the solution to the Kohn–Sham (ks) equations, that requires an iterative procedure that starts from a guess for the density matrix. Converged densities from previous points in the trajectory can be used to extrapolate a new guess, however, the non-linear constraint that an idempotent density needs to satisfy make the direct use of standard linear extrapolation techniques not possible. In this contribution, we introduce a locally bijective map between the manifold where the density is defined and its tangent space, so that linear extrapolation can be performed in a vector space while, at the same time, retaining the correct physical properties of the extrapolated density using molecular descriptors. We apply the method to real-life, multiscale polarizable qm/mm bomd simulations, showing that sizeable performance gains can be achieved, especially when a tighter convergence to the ks equations is required.

1 Introduction

Ab-initio Born–Oppenheimer molecular dynamics (bomd) is one of the most powerful and versatile techniques in computational chemistry, but its computational cost represents a big limitation to its routine use in quantum chemistry. To perform a bomd simulation, one needs to solve the quantum mechanics (qm) equations, usually Kohn–Sham (ks) density functional theory (dft), at each step, before computing the forces and propagating the trajectory of the nuclei. The iterative self-consistent field (scf) procedure is expensive, as it requires to build at each iteration the ks matrix and to diagonalize it. Convergence can require tens of iterations, making the overall procedure, which has to be repeated a very large number of times, very expensive. To reduce the cost of bomd simulations, it is therefore paramount to be able to perform as little iterations as possible while, at the same time, obtaining an scf solution accurate enough to afford stable dynamics.

From a conceptual point of view, at each step of a bomd simulation, a map is built from the molecular geometry to the scf density, and then to the energy and forces. The former map, in practice, requires the solution to the scf problem and is not only very complex, but also highly non-linear. However, the propagation of the molecular dynamics (md) trajectory uses short, finite time steps, so that the converged densities at previous steps, and thus at similar geometries, are available. As a consequence, the geometry to density map can be in principle approximated by extrapolating the available densities at previous steps. The formulation of effective extrapolation schemes has been the object of several previous works [18]. Among the proposed strategies, one for density matrix extrapolation was developed by Alfè ], as a generalization of the wavefunction extrapolation method by Arias et al. ], which is based on a least-squares regression on a few previous atomic positions. The main difficulty in performing an extrapolation of the density matrix stems from the non-linearity of the problem. In other words, a linear combination of idempotent density matrices is not an idempotent density matrix, as density matrices are elements of a manifold and not of a vector space. To circumvent this problem, strategies that extrapolate the Fock or ks matrix [38, 22] or that use orbital transformation methods [23, 42, 43] have been proposed.

A completely different strategy has been proposed by Niklasson and coworkers [30, 31, 29]. In the extended Lagrangian Born–Oppenheimer (xlbo) method, an auxiliary density is propagated in a time-reversible fashion and then used as a guess for the scf procedure. The strategy is particularly successful, as it combines an accurate guess with excellent stability properties. In particular, the xlbo method allows one to perform accurate simulations converging the scf to average values (for instance, in the root-mean-square (rms) norm of the density increment), which are usually insufficient to compute accurate forces. An xlbo-based bomd strategy has been recently developed by some of us in the context of polarizable multiscale bomd simulations of both ground and excited states [25, 26, 35, 11]. Multiscale strategies can be efficiently combined, in a focused model spirit, to bomd simulations to extend the size of treatable systems. Using a polarizable embedding allows one to achieve good accuracy in the description of environmental effects, especially if excited states or molecular properties are to be computed. In such a context, the xlbo guessing strategy allows one to perform stable simulation even using the modes rms convergence threshold, which, thanks to the quality of the xlbo guess, typically requires only about scf iterations. Recently, scf-less formulations of the xlbo schemes have also been proposed.[32, 33]

Unfortunately, the performances of the xlbo-based bomd scheme are not so good when a tighter scf convergence is required, which can be the case when one wants to perform md simulations using post Hartree–Fock (hf) methods or for excited states described in a time-dependent dft framework [35, 34]. In fact, such methods require the solution to a second set of qm equations which are typically non-variational, making them more susceptible to numerical errors and instabilities. Computing the forces for non-scf energies requires therefore a more accurate scf solution.

The present work builds on all previous methods for density matrix extrapolation and aims at proposing a simple framework to overcome the difficulties associated with the non-linearity of the problem. The strategy that we propose is based on a differential geometry approach and is particularly simple. First, we introduce a molecular descriptor, i.e., a function of the molecular geometry and other molecular parameters that represents the molecular structure in a natural way that respects the invariance properties of the molecule within a vector space. At the -th step of an md trajectory, we fit the new descriptor in a least-square fashion using the descriptors available at a number of previous steps and obtain a new set of coefficients. However, we do not use them to directly extrapolate the density. Instead, we first map the unknown density matrix, that we aim to approximate, from the manifold where it is defined to its tangent space. We then perform the extrapolation to approximate the representative density matrix in the tangent space, before mapping this approximation back to the manifold in order to obtain an extrapolated density matrix that satisfies the required physical constraints. This geometrical strategy, that has recently been introduced in the context of density matrix approximation by us [36], allows one to use standard linear extrapolation machinery without worrying about the non-linear physical constraints on the density matrix, since both the space of descriptors and the tangent space are vector spaces. As the mapping between the manifold and the tangent space is locally bijective, no concerns about redundant degrees of freedom (such as rotations that mix occupied orbitals) arise. The map and its inverse, which are known as Grassmann Logarithm and Exponential, are easily computed and the implementation of the strategy is straightforward. We shall denote this approach as Grassmann extrapolation (g-ext).

In this contribution, we choose a simple, yet effective molecular descriptor and, for the extrapolation, a least square strategy. These are not the only choices. As our strategy allows one to use any linear extrapolation technique between two vector spaces, which can be in turn coupled with any choice of molecular descriptor, more advanced strategies can be proposed, including machine learning. Our approach ensures that the extrapolated density, independent of how it is obtained, satisfies all the physical requirements of a density stemming from a single Slater determinant.

The paper is organized as follows. In the upcoming Section 2, we present all necessary theoretical foundations required for the development and implementation of the presented g-ext approach. Section 3 then presents detailed numerical tests illustrating the performance of the extrapolation scheme, including realistic applications of bomd within a qm/molecular mechanics (mm)-context before we draw the conclusion in Section 4.

2 Theory

We consider Born–Oppenheimer ab-initio bomd simulations where the position vector evolves in time according to classical mechanics as

| (1) |

where denote the position of the -th atom with mass respectively the force acting on it at time . We consider a general qm/mm-method but the setting also trivially applies to pure qm-models. The forces at a given time and position of the nuclei arise from different interactions, namely qm-qm, qm-mm and mm-mm interactions. The computationally expensive part is to determine the state of the electronic structure, which is modelled here at the dft level with a given basis set of dimension . Note that considering hf instead of dft would not change much in the presentation of the method. It consists of computing the instantaneous non-linear eigenvalue problem

| (2) |

where and denote the coefficients respectively of the occupied orbitals and density matrix and the diagonal matrix containing the energy levels. Further, denotes the dft-operator acting on the density matrix and the customary overlap matrix.

At this point it is useful to note that the slightly modified coefficient matrix belongs to the so-called Stiefel manifold defined as follows

| (3) |

due to the second equation in Equation (2). In consequence the normalized density matrix belongs to the following set

| (4) |

which can be identified with the Grassmann manifold of -dimensional subspaces of by means of the spectral projectors. In the following, we always assume that the density matrix has been orthonormalized, and therefore drop the from the notation. For any , one can associate the tangent space which has the structure of a vector space. The evolution of the electronic structure can therefore be seen as a trajectory on where denotes the trajectory of the nuclei.

The goal of the present work is to find a good approximation for the electronic density matrix at the next step of md trajectory by extrapolating the densities at previous steps. More precisely, based on the knowledge of the density matrices , , at previous times , one aims to compute an accurate guess of the density matrix at time .

Thus, the problem formulation can be seen as an extrapolation problem of the following form: given the set of couples and a new position vector , provide a guess for the solution . Here and in the remaining part of the article, we restrict ourselves on the positions of the qm-atoms, i.e., with slight abuse of notation we denote from now on by the set of qm-positions only, even within a qm/mm-context.

In order to approximate the mapping , we split this mapping in several sub-maps that will be composed as follows:

| (5) |

where the first line shows the concatenation of maps in terms of spaces and the second in terms of variables. The different mappings will be presented and motivated in the following.

The first map is a mapping of the nuclear coordinates to a (possibly high-dimensional) molecular descriptor that accounts for certain symmetries and invariances of the molecule. The last map, known as the Grassmann exponential, is introduced in order to obtain a resulting density matrix belonging to and thus to guarantee that the guess fulfils all properties of a density matrix. As is a manifold this is not straightforward. The second mapping is the one that we aim to approximate but before we do that, let us first introduce those two special mappings, i.e., the molecular descriptor and the Grassmann exponential, in more details.

2.1 Molecular descriptors

The map is a map from atomic positions to molecular descriptors. These descriptors are used as fingerprints for the considered molecular configurations. Such molecular descriptors have been widely used in the past decades e.g., to learn potential energy surfaces (pes) [7, 41, 4, 8, 27, 15, 14], or to predict other quantities of interest. Among widely used descriptors, one can find Behler–Parinello symmetry functions [6], Coulomb matrix [40], smooth overlap of atomic positions (soap) [20], permutationally invariant polynomials [12], or the atomic cluster expansion (ace) [16, 3]. These molecular descriptors are usually designed to retain similar symmetries as the targeted quantities of interest.

In this work, the quantity we are approximating is the density matrix, which is invariant with respect to translations as well as permutations of like particles. The transformation of the density matrix with respect to a global rotation of the system depends on the implementation, as it is possible to consider either a fixed Cartesian frame or one that moves with respect to the molecular system. In the former case, there is an equivariance with respect to rotations of the molecular system, while in the latter, the density matrix is invariant. We should therefore in principle use a molecular descriptor satisfying those properties.

However, the symmetry properties we will rely on are mostly translation and rotation invariance. Therefore, we will use a simple descriptor in form of the Coulomb-matrix denoted by , given by

| (6) |

Note that such a descriptor is not invariant (nor equivariant) with respect to permutations of identical particles. However, we have found this descriptor to offer a good trade-off between simplicity and efficiency. Note that since we aim to extrapolate the density matrix from previous time-steps, permutations of identical particles never occur from one time-step to another and we do not need to rely on this property. Nevertheless, we expect that a better description could be achieved by using more flexible descriptors, such as ace polynomials or the soap descriptors, where the descriptors themselves can be tuned.

2.2 The Grassmann exponential

We only give a brief overview as the technical details have already been reported elsewhere [17, 44, 36]. The set is a smooth manifold and thus, at any point, say in our application, there exists the tangent space such that one can associate nearby points to tangent vectors . The mapping is known as the Grassmann logarithm and its inverse mapping as the Grassmann exponential . There also holds that and . These mappings are not only abstract tools from differential geometry but can be computed by means of performing an singular value decomposition (svd) [17, 44, 36]. In our application we use the same reference point in all cases which brings some computational advantages as will be discussed in more detail the the upcoming Section 2.3.

2.3 The approximation problem

Since the tangent space is a (linear) vector space, we can now aim to approximate the mapped density matrix on the tangent space . To simplify the presentation, we shift the indices in the following and describe the extrapolation method for the first time steps. In the general setting, we should consider the positions for , to extrapolate the density matrix at position , where is the current time step of the md. We look for parameter functions , such that, given previous snapshots for from to , corresponding to some ’s, the approximation of any density matrix on the tangent space is written as

| (7) |

with .

The question is then how to find these coefficient functions and we propose to find those via the resolution of a (standard) least-square minimization problem. For a given position , we look for coefficients that minimise the –error between the descriptor and a linear combination of the previous ones

| (8) |

In matrix form, this simply reads

| (9) |

where is the matrix of size containing the descriptors . Note that we only fit on the level of the descriptor, i.e., the mapping from the position vector to the descriptor , and that this method is similar to the ones used by Arias et al. ], Alfè ], where the descriptors they used were the positions of the atoms and only considered the previous three time-steps of the md.

If the system is underdetermined, we select the vector that has the smallest norm. However, in general, the system is overdetermined as we have more descriptors than snapshots. This implies that this formulation verifies the interpolation principle: for every and from to , the solution of Problem (8) at the positions satisfies .

In principle, should we consider a large amount of previous descriptors, then the system may become undetermined and violates the interpolation principle. To mitigate this, we can use a stabilization scheme, as explained in the upcoming subsection.

Note that once we have computed the coefficients by solving Problem (12), one computes the initial guess for the density by using the same coefficients in the linear combination on the tangent space as in Equation (7) and finally take the exponential (see Equation (5)). The rational for this step is that, if the second mapping in Equation (5), that we denote here by , was linear, then there would hold

| (10) |

In practice, the mapping is however not linear and this approach works well in the test cases we considered. A possible explanation for this is the unfolding of the nuclear coordinates into a high-dimensional descriptor-space . Indeed, the high-dimensionality of seems to allow an accurate approximation of by a linear map. Further, if the system is overdetermined, the scheme satisfies the interpolation property , and hence we recover the expected density matrix .

2.3.1 Stabilization

To stabilize the extrapolation by limiting high oscillations of the coefficients, we apply a Tikhonov regularization

| (11) |

for some choice of . This problem is always well-posed, and corresponds to solving the following problem

| (12) |

where is the vector padded with zeros and is the matrix padded with the square diagonal matrix . We observe in practice that using such a stabilization makes possible to use more previous points without degradation of the initial guess.

2.4 The final algorithm

Given previous density matrices for , the initial guess is computed following Algorithm 1. That is, we start by computing the logarithms of the density matrices , from the coefficients that are first orthonormalized by performing . Here, we remark that we assume that the density matrices have been previously Löwdin orthonormalized.

We then compute the descriptors needed to build the matrix and solve Problem (12). This provides the coefficients in the linear combination of the on the tangent space. Finally, we compute the exponential of the linear combination in order to obtain the predicted density matrix.

Note that the reference point is chosen once and for all, which makes the computations of these logarithms lighter, even though there is no theoretical justification for keeping a single point as a reference. Indeed, it is known that the formulae are only correct locally (around ) on the manifold. However, in practice we have never observed the need to change the reference point. This enables us to compute only one logarithmic map per time step; and hence, only two svd in total per time step. To have a robust algorithm that will work even in this edge case, it will be sufficient to check that the exponential and logarithmic maps are still inverse of one another.

Finally, to be on the safe-side with respect to the computations of the exponential, we have added a check on the orthogonality of the matrix that is obtained: If the residue is higher than a certain threshold, we then perform an orthogonalization of the result.

3 Numerical tests

In this section we present a series of numerical tests of the newly developed strategy. We test our method on four different systems. All the systems have been object of a previous or current study by some of us, and can therefore be considered representative of real-life applications.

The first system is 3-hydroxyflavone (3hf) in acetonitrile [34]. Two systems (ocp and appa) are chromophores embedded in a biological matrix — namely, a carotenoid in the orange carotenoid protein (ocp) and flavine in acid phosphatase (appa), a blue light-using flavine photoreceptor [10, 9, 19]. The fourth system is dimethylaminobenzonitrile (dmabn) in methanol [35]. The main characteristics of the systems used for testing are recapitulated in Table 1.

| System | |||

|---|---|---|---|

| ocp | |||

| appa | |||

| dmabn | |||

| 3hf |

The systems used for testing include a quite large qm chromophore, the ocp and three medium-sized systems, embedded in large (appa, 3hf) and medium-sized environments (dmabn) and are representative of different possible scenarios.

To test the performances of the new g-ext strategy, we performed three sets of short () multiscale bomd simulations on ocp, appa, 3hf, and dmabn. ks density functional theory was used to model the qm subsystem, using the B3LYP [5] hybrid functional and Pople’s 6-31G(d) basis set [21]. For the stability and energy conservation of the method, we did a longer and more realistic simulations of on 3hf, where the flavone moiety was described using the B97X hybrid functional [13] and Pople’s 6-31G(d) basis set. In all cases, the environment was modeled using the AMOEBA polarizable force field [37].

All the simulations have been performed using the Gaussian–Tinker interface previously developed by some of us [25, 26]. In particular, we use a locally modified development version of Gaussian [gdv] to compute the qm, electrostatic and polarization energy and forces, and Tinker [39] to compute all others contributions to the qm/mm energy. We implemented the g-ext extrapolation scheme in Tinker, that acts as the main driver for the md simulation, being responsible of summing together all the various contributions to the forces and propagating the trajectory. At each md step, using the GauOpen interface [24], the density matrix, molecular orbital (mo) coefficients, and overlap matrix produced by Gaussian are retrieved. These are used to compute the extrapolated density as described in Section 2. The density is then passed back to Gaussian to be used for the next md step. All the simulations were carried out in the NVE ensemble, using the velocity Verlet integrator and a time step. Concerning stabilization, we found that good overall results were obtained using a parameter , where is the tolerance of the scf algorithm.

3.1 Numerical results

To assess the performance of the g-ext guess we perform md simulations on the four systems described in Section 3 starting from the same exact conditions (positions and initial velocities) and using various strategies to compute the guess density for the scf solver. We compare various flavors of the g-ext method with the the xlbo extrapolation scheme [31]. Here, we note that the original xlbo method performs a propagation of an auxiliary density matrix, which is then used as a guess. The latter is not idempotent: to restore such a property, we perform a purification step at the beginning of the SCF procedure using McWeeny’s algorithm [28]. In the following, we therefore compare our method, where we use to previous points for the fitting and extrapolation, to both the standard xlbo and to xlbo followed by purification (xlbo/mw). We use an scf convergence threshold of with respect to the rms variation of the density.

We report in Table 2, for each method, the average number of scf iterations performed along the md simulation together with the associated standard deviation. As the xlbo strategy requires previous points, during which a standard scf is performed, we discard the first points from the evaluation of the aforementioned quantities to have a fairer comparison.

We do not report the total time required to compute the guess, as it is in all cases very small (up to wall clock time for the largest system using the g-ext(6) guess). This is an important consideration, as the g-ext method requires one to perform various linear-algebra operations (in particular, thin svd) that can in principle be expensive. Thanks to the availability of optimized LAPACK libraries, this is in practice not a problem.

| ocp | dmabn | appa | 3hf | |||||

|---|---|---|---|---|---|---|---|---|

| Method | Average | Average | Average | Average | ||||

| xlbo | ||||||||

| xlbo/mw | ||||||||

| g-ext(3) | ||||||||

| g-ext(4) | ||||||||

| g-ext(5) | ||||||||

| g-ext(6) | ||||||||

From the results in Table 2, we see that the g-ext algorithms systematically outperforms the xlbo method. It is interesting to note that the McWeeny purification step has a sizeable effect on the performances of the xlbo method only for the largest system, ocp, where it results in the gain of almost one scf iteration on average. On the other systems, the purification step has a smaller effect.

In all the systems we tested, the performances of the g-ext method are systematically better than in xlbo, including with McWeeny purification. The effectiveness of the g-ext extrapolation increases when going from to points, but quickly stagnates. We have performed further tests with more than (up to ) extrapolation points, but never noted any further gain.

We observe a reduction in the number of iterations that goes from in dmabn to in ocp ( when compared to xlbo without McWeeny purification). We remark that these gains, while apparently not so large, are greatly amplified during the md simulation, due to the large number of steps that need to be performed.

The tests performed with a convergence threshold are representative of a standard, dft ground state bomd simulation. When performing a more sophisticated quantum mechanical calculation, such as a bomd on an excited state pes [34], such a convergence threshold may not be sufficient to guarantee the stability of the simulation, as the scf solution is used to set up the linear response equations and the numerical error can be amplified, resulting in poorly accurate forces.

We tested the g-ext algorithm in its best-performing version, the one that uses six extrapolation points, with a tighter, threshold, again for the rms variation of the density. The results are reported in Table 3, where we compare the g-ext(6) scheme with the xlbo method with McWeeny purification.

The xlbo method is based on the propagation of an auxiliary density and therefore the accuracy of the guess it generates depends little on the accuracy of the previous scf densities. As a consequence, its performances are reduced if a tighter convergence is required. The g-ext guess, on the other hand, uses previously computed densities as its building blocks and one can expect the accuracy of the resulting guess to be linked to the convergence threshold used during the simulation.

This is exactly what we observe. Using a threshold of , the g-ext(6) guess exhibits significantly better performances than xlbo, gaining, on average, from about to about scf iterations on the tested systems.

| ocp | dmabn | appa | 3hf | |||||

|---|---|---|---|---|---|---|---|---|

| Method | Average | Average | Average | Average | ||||

| xlbo/mw | ||||||||

| g-ext(6) | ||||||||

3.1.1 Stability

The good performances of the g-ext guess come, however, at a price, namely, the lack of time reversibility. We can thus expect the total energy in a NVE simulation to exhibit a long-time drift (ltd). Time reversibility and long-time energy conservation are, on the other hand, one of the biggest strengths of the xlbo method.

To investigate the stability of bomd simulations using the g-ext guess, we build a challenging case, where we start a bomd simulation far from well-equilibrated conditions. We use the 3hf system as a test case and achieve the noisy starting conditions by starting from a well-equilibrated structure and changing the dft functional from B3LYP to B97XD. This way, we have a physically acceptable structure, with no close atoms or other problematic structural situations, but obtain starting conditions that are far from equilibrium.

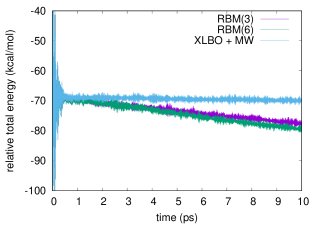

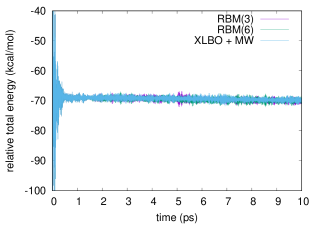

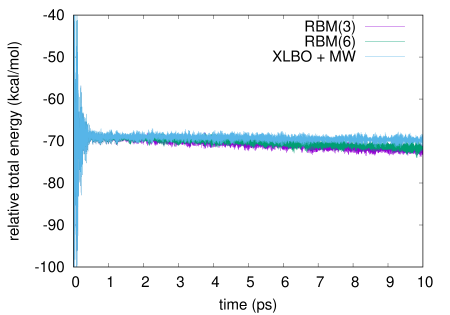

We report in Figure 1 the total energy along a bomd simulation of 3hf in acetonitrile using either a scf convergence threshold (left panel) or a one (right panel). The same results for a threshold are reported in the supporting information. We compare the g-ext(3) and g-ext(6) methods to the xlbo one including McWeeny purification. As already noted, while in principle the purification may spoil the time reversibility, this has no noticeable effect in practice.

The very noisy starting conditions are apparent from the energy profiles, that exhibits large oscillations in the first couple hundreds femtoseconds.

To better estimate the short- and long-time energy stability, we report in Table 4 the average short-time fluctuation (stf) and ltd of the energy. The former is computed by taking the rms of the energy fluctuation every and averaging the results over the trajectory, discarding the first , the latter by fitting the energy with a linear function and taking the slope.

All methods show comparable short-term stability, which is to be mainly ascribed to the chosen integration time-step. On the other hand, from both the results in Table 4 and Figure 1, we observe a clear drift of the energy when the g-ext method is used. In particular, the system cools of about with either g-ext(3) or g-ext(6). The xlbo trajectory, despite the McWeeny purification, exhibits an almost perfect energy conservation.

These results are not surprising, but should be taken into account when choosing to use the g-ext guess, which, if coupled to a scf convergence threshold, cannot guarantee long-term energy conservation. The drift is overall not too large and can be handled by using a thermostat. Whether or not the trade between performances and energy conservation is acceptable for a production simulation is a decision that ultimately lies with the user.

Increasing the accuracy of the scf computation improves the overall stability for g-ext, which is already good at and becomes virtually identical to the one offered by the xlbo method at .

| Conv. | Conv. | Conv. | ||||

|---|---|---|---|---|---|---|

| Method | stf | ltd | stf | ltd | stf | ltd |

| xlbo/mw | ||||||

| g-ext(3) | ||||||

| g-ext(6) | ||||||

|

|

4 Conclusion

In this contribution, we presented an extrapolation scheme to predict initial guesses of the density matrix for the scf-iterations within bomd. What makes our approach new is that we enforce the idempotency of the density matrix by extrapolating not the densities themselves, but their map onto a vector space, which is the tangent plane to the manifold of the physically acceptable densities. Such a map is locally bijective, so that after performing the extrapolation, we can map the new density back to the original manifold, providing thus an idempotent density. The main element of novelty of the algorithm is that, by working on a tangent space, it allows one to use any linear extrapolation technique, while at the same time automatically ensuring the correct geometrical structure of the density matrix. As such, the technique presented in this paper can be seen as a simple case of a more general framework. Such a framework allows one to recast the problem of predicting a guess density by extrapolating information available from previous md steps as a mapping between two vector spaces, i.e., the space of molecular descriptors and the tangent plane. This geometric approach can be seen as an alternative to extrapolating quantities derived from the density, such as the Fock or Kohn–Sham matrix, as proposed by Pulay and Fogarasi [38] and by Herbert and Head-Gordon [22]. However, the framework we developed, using molecular descriptors and a general linear extrapolation technique, can in principle be easily extended to such approaches.

That being said, our choices of both the molecular descriptor and of the extrapolation strategies are far from being unique. In recent years, molecular descriptors gained attraction within the rise of machine-learning (ml) techniques. Our choice, namely, using the Coulomb matrix, is only one of the many possibilities, and while being simple and effective, more advanced descriptors may be used and possibly improve the overall performances of the method. We also used a straightforward (stabilized) least-square interpolation of the descriptors at previous point to compute the extrapolation coefficients for the densities. This strategy is, again, simple yet effective. However, many other approaches can be used. In particular, ml techniques may not only provide a very accurate approximated map, but also benefit of a larger amount of information (i.e., use the densities computed at a large number of previous steps), further improving the accuracy of the guess. Improvements on the descriptors and extrapolation strategies are not the only possible extensions of the proposed method. A natural extension that is under active investigation is the application to the g-ext guess to geometry optimization, for which the xlbo scheme cannot be used.

Overall, even the simple choices made in this contribution produced an algorithm that exhibits promising performances. In all our tests, the g-ext method outperformed the well-established xlbo technique, especially for tighter scf accuracies which may be relevant for post-scf bomd computations, including computations on excited-state pes. While we tested the method only for ks dft, it can also be used for Hartree–Fock or semiempirical calculations. The main disadvantage of the proposed strategy with respect to the xlbo method is, however, the lack of time reversibility, which manifests itself as a lack of long-term energy conservation. In particular, for longer md simulations, the total energy may exhibit a visible drift, which is something that the user must be aware of. In our test, the observed drift was relatively small and the use of a thermostat should be enough to avoid problems in practical cases, however, this is a clear, and expected, limitation of the proposed approach. We note that, using a tighter scf convergence, which is also the case where the proposed method shows its best performances, produces an energy conserving trajectory, even starting from very noisy conditions. A time-reversible generalization of the g-ext method is anyways particularly attractive, and is at the moment under active investigation.

Supporting Information Available

A Julia template of the g-ext algorithm is available at https://github.com/epolack/GExt.jl. The figure representing the total energy computation with an scf convergence threshold of for the molecule 3hf and formulas for the exponential and logarithm functions are available in the supplementary information.

Funding

Part of this work was supported by the French “Investissements d’Avenir” program, project ISITE-BFC (contract ANR-15-IDEX-0003).

References

- [1] (1999) Ab initio molecular dynamics, a simple algorithm for charge extrapolation. 118 (1), pp. 31–33. External Links: Document, ISSN 0010-4655 Cited by: §1, §2.3.

- [2] (1992) Ab initio molecular-dynamics techniques extended to large-length-scale systems. 45 (4), pp. 1538–1549. External Links: Document Cited by: §1, §2.3.

- [3] (2019-11) Atomic cluster expansion: completeness, efficiency and stability. External Links: Link, 1911.03550 Cited by: §2.1.

- [4] (2013) Machine-learning approach for one- and two-body corrections to density functional theory: applications to molecular and condensed water. 88, pp. 054104. Cited by: §2.1.

- [5] (1993) Density-Functional Thermochemistry. 3. The Role of Exact Exchange. 98 (7), pp. 5648–5652. Cited by: §3.

- [6] (2007-04) Generalized neural-network representation of high-dimensional potential-energy surfaces. 98 (14), pp. 146401 (en). Cited by: §2.1.

- [7] (2011) Neural network potential-energy surfaces in chemistry: a tool for large-scale simulations. 13, pp. 17930–17955. Cited by: §2.1.

- [8] (2015) Constructing high-dimensional neural network potentials: a tutorial review. 115 (16), pp. 1032–1050. Cited by: §2.1.

- [9] (2020) Molecular mechanisms of activation in the orange carotenoid protein revealed by molecular dynamics. 142 (52), pp. 21829–21841. External Links: Document Cited by: §3.

- [10] (2020) The Multiple Roles of the Protein in the Photoactivation of Orange Carotenoid Protein. 6 (1), pp. 187–203. External Links: Document, ISSN 24519294 Cited by: §3.

- [11] (2020) Polarizable embedding QM/MM: the future gold standard for complex (bio)systems?. 22 (26), pp. 14433–14448. External Links: Document Cited by: §1.

- [12] (2009-10) Permutationally invariant potential energy surfaces in high dimensionality. 28 (4), pp. 577–606. Cited by: §2.1.

- [13] (2008) Long-range corrected hybrid density functionals with damped atom-atom dispersion corrections. 10 (44), pp. 6615–6620. External Links: Document Cited by: §3.

- [14] (2018) Towards exact molecular dynamics simulations with machine-learned force fields. 9, pp. 3887. Cited by: §2.1.

- [15] (2017) Machine learning of accurate energy-conserving molecular force fields.. 3, pp. e1603015. Cited by: §2.1.

- [16] (2019-01) Atomic cluster expansion for accurate and transferable interatomic potentials. 99 (1), pp. 014104. Cited by: §2.1.

- [17] (1998-01-01) The Geometry of Algorithms with Orthogonality Constraints. 20 (2), pp. 303–353. External Links: Document Cited by: §2.2, Grassmann Exponential and Logarithm maps, Grassmann Exponential and Logarithm maps.

- [18] (2016) On the existence of the optimal order for wavefunction extrapolation in Born-Oppenheimer molecular dynamics. 144 (24), pp. 244103. External Links: Document Cited by: §1.

- [19] (2021) From crystallographic data to solution structure of photoreceptors: the case of appa bluf domain. Note: Submitted Cited by: §3.

- [20] (2021-04) The role of feature space in atomistic learning. 2 (2), pp. 025028. External Links: Document Cited by: §2.1.

- [21] (1972) Self — Consistent Molecular Orbital Methods . XII . Further Extensions of Gaussian — Type Basis Sets for Use in Molecular Orbital Studies of Organic Molecules Publishing Articles you may be interested in Self ‐ consistent molecular or. 56 (5), pp. 2257–2261. Cited by: §3.

- [22] (2005) Accelerated, energy-conserving Born–Oppenheimer molecular dynamics via Fock matrix extrapolation. 7 (18), pp. 3269–3275. External Links: Document Cited by: §1, §4.

- [23] (1994) Exponential transformation of molecular orbitals. 101 (5), pp. 3862–3865. External Links: Document, ISSN 0021-9606 Cited by: §1.

- [24] Interfacing to gaussian 16 (v2). Note: https://gaussian.com/interfacing/Last accessed: 1st June 2018 Cited by: §3.

- [25] (2017) Hybrid QM/MM molecular dynamics with AMOEBA polarizable embedding. 13 (9), pp. 4025–4033. External Links: Document Cited by: §1, §3.

- [26] (2019) Towards large scale hybrid QM/MM dynamics of complex systems with advanced point dipole polarizable embeddings. 10 (30), pp. 7200–7211. External Links: Document Cited by: §1, §3.

- [27] (2015) Neural network-based approaches for building high dimensional and quantum dynamics-friendly potential energy surfaces. 115 (16), pp. 1012–1020. Cited by: §2.1.

- [28] (1960-04) Some recent advances in density matrix theory. 32, pp. 335–369. External Links: Link, Document Cited by: §3.1.

- [29] (2009) Extended Lagrangian Born–Oppenheimer molecular dynamics with dissipation. 130 (21), pp. 214109. External Links: Document Cited by: §1.

- [30] (2006-09) Time-reversible born-oppenheimer molecular dynamics. 97, pp. 123001. External Links: Link, Document Cited by: §1.

- [31] (2008) Extended Born-Oppenheimer Molecular Dynamics. 100 (12), pp. 123004. External Links: Document Cited by: §1, §3.1.

- [32] (2017) Next generation extended lagrangian first principles molecular dynamics. 147 (5), pp. 054103. External Links: Document Cited by: §1.

- [33] (2020) Density-matrix based extended lagrangian born–oppenheimer molecular dynamics. 16 (6), pp. 3628–3640. External Links: Document Cited by: §1.

- [34] (2021) An enhanced sampling QM/AMOEBA approach: the case of the excited state intramolecular proton transfer in solvated 3-hydroxyflavone. 154 (18), pp. 184107. External Links: Document Cited by: §1, §3.1, §3.

- [35] (2020) Excited state born-oppenheimer molecular dynamics through a coupling between time dependent DFT and AMOEBA. 22, pp. 19532–19541. External Links: Document Cited by: §1, §1, §3.

- [36] (2020) An approximation strategy to compute accurate initial density matrices for repeated self-consistent field calculations at different geometries. 118 (19-20), pp. e1779834. External Links: Document, ISSN 0026-8976 Cited by: §1, §2.2.

- [37] (2010) Current status of the AMOEBA polarizable force field. 114 (8), pp. 2549–2564. External Links: Document Cited by: §3.

- [38] (2004) Fock matrix dynamics. 386 (4), pp. 272–278. External Links: Document Cited by: §1, §4.

- [39] (2018) Tinker 8: software tools for molecular design. 14 (10), pp. 5273–5289. Cited by: §3.

- [40] (2012-02) Fast and accurate modeling of molecular atomization energies with machine learning. 108 (5), pp. 058301 (en). Cited by: §2.1.

- [41] (2012) Fast and accurate modeling of molecular atomization energies with machine learning. 108, pp. 058301. Cited by: §2.1.

- [42] (2003) An efficient orbital transformation method for electronic structure calculations. 118 (10), pp. 4365–4369. External Links: Document Cited by: §1.

- [43] (2005) Quickstep: Fast and accurate density functional calculations using a mixed Gaussian and plane waves approach. 167 (2), pp. 103–128. External Links: Document Cited by: §1.

- [44] (2019) Manifold interpolation and model reduction. Note: http://arxiv.org/abs/1902.06502 Cited by: §2.2, Grassmann Exponential and Logarithm maps, Grassmann Exponential and Logarithm maps.

Supplementary figure

Grassmann Exponential and Logarithm maps

The Grassmann manifold is a differential manifold and, for any given with and for fixed , the tangent space is characterized by

| (13) |

Note that the tangent space is a linear space. One can then introduce the Grassmann exponential which maps tangent vectors on to the manifold in a locally bijective manner around . Indeed, it is not only an abstract tool from differential geometry, but it can be computed in practice involving the matrix exponential. By complementing with orthonormal columns to obtain , where denotes the group of orthogonal matrices of dimension , and we have

| (14) |

Here, denotes the matrix exponential function, the matrix contains expansion coefficients of columns of in a span of columns of such that and are the first columns of the identity matrix. As described in [17, 44], the Grassmann exponential can then be equivalently expressed by

| (15) |

by means of a singular value decomposition (SVD) of the matrix .